Before selecting specific technology and service solutions, an organization first needs to enumerate its critical business functions and services and the threats to their resiliency. Adopting a strategy to combat those threats may not mean selecting a specific tool; it may instead mean adopting a course of enterprise action, behavior, or belief that then guides operational and tactical tool choices.

Zero Trust Model

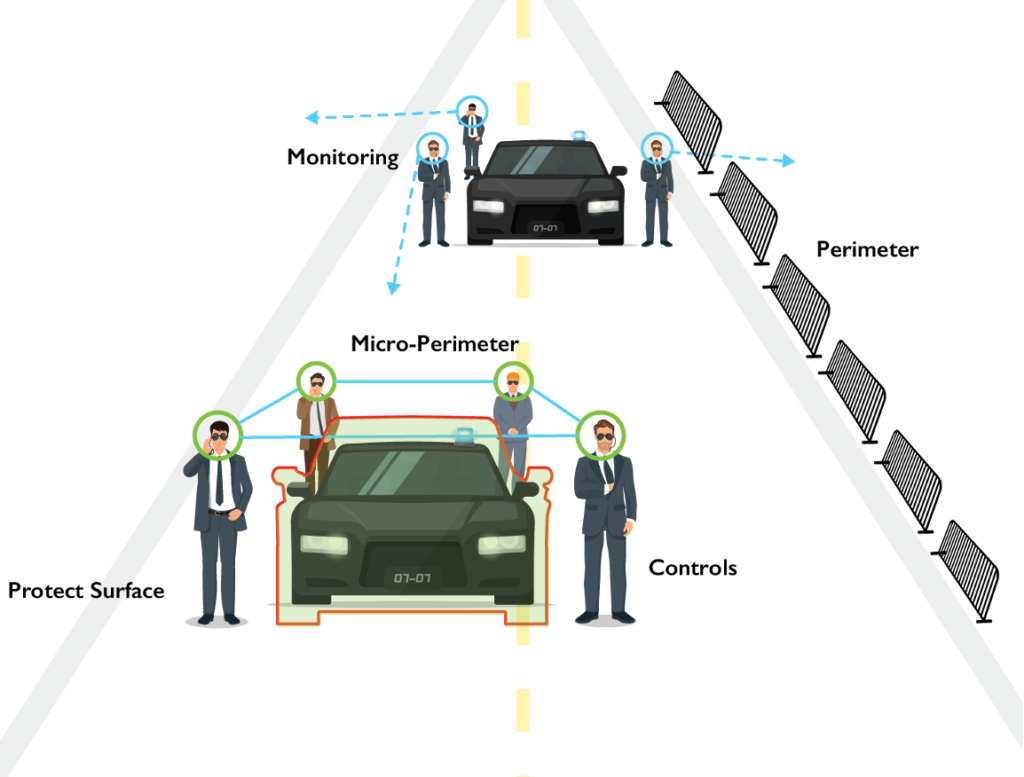

Adopting the Zero Trust Model, as advocated by its creator John Kindervag, for public cloud consumption is one way to develop a strategy of countermeasures to cloud-related threats. Mr. Kindervag opines, “Trust is a human emotion that we have mistakenly injected into digital systems.” Because trust is a vulnerability, it is at the crux of most compromises.

It is the anthropomorphism of networks that is the problem. The working practice of the Zero Trust Model is to never trust!

Five Steps to Zero Trust

- Identify your protect surface

- Map the data flows for your sensitive data

- Architect your Zero Trust environment

- Create your automated rule base

- Continuously monitor your Zero Trust ecosystem

In this model, information security practices such as isolation are applied throughout the entire data center and other connected ecosystems. Data center security hygiene should encompass least-privilege technical controls and need-to-know administrative mandates, with the mandates preceding technical implementation.

Each organization has its own bespoke protect surface.

Micro-segmentation

The toolsets of current adversaries are polymorphic in nature and allow threats to bypass static security controls. Modern cyberattacks take advantage of traditional security models to move easily between systems within a data center.

Micro-segmentation is a principal design and activity of the Zero Trust Model, which aids in protecting against dynamic threats. A fundamental design requirement of micro-segmentation is to understand the protection requirements for east-west (traffic within a data center) and north-south (traffic to and from the internet) traffic flows.
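The east-west versus north-south distinction can be sketched in a few lines. This is a minimal illustration, assuming the data center's address ranges are known in advance; the networks listed and the function names `is_internal` and `classify_flow` are hypothetical and not part of any product.

```python
import ipaddress

# Hypothetical address ranges for a single data center; substitute your own.
DATA_CENTER_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_internal(address: str) -> bool:
    """Return True if the address falls inside any data center network."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in DATA_CENTER_NETWORKS)


def classify_flow(source: str, destination: str) -> str:
    """Label a flow by direction relative to the data center boundary."""
    src_internal = is_internal(source)
    dst_internal = is_internal(destination)
    if src_internal and dst_internal:
        return "east-west"      # both endpoints inside the data center
    if src_internal or dst_internal:
        return "north-south"    # the flow crosses the data center boundary
    return "external"           # neither endpoint belongs to the data center
```

In practice the boundary is rarely a simple list of prefixes, so a real classification would likely draw on an asset inventory rather than static ranges.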

IP Flow Information Export (IPFIX) may be used to determine the nature of the traffic and to uncover inefficiencies and vulnerabilities. The objective of reading the traffic flow output is to identify patterns and relationships that lead to granular policy schemes for specific workloads. A single data center may have hundreds or thousands of workloads.
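As a rough sketch of how flow output might be turned into candidate policy, the example below assumes the flow records have already been decoded by a collector into dictionaries keyed by standard IPFIX information element names (`sourceIPv4Address`, `destinationIPv4Address`, `protocolIdentifier`, `destinationTransportPort`). The workload mapping, the function names, and the `min_observations` threshold are hypothetical choices made for illustration.

```python
from collections import Counter

# Hypothetical mapping from IP address to workload name (assumption).
WORKLOAD_BY_IP = {
    "10.0.1.10": "web",
    "10.0.2.20": "app",
    "10.0.3.30": "db",
}


def summarize_flows(records):
    """Count observed flows per (source workload, destination workload, protocol, port)."""
    counts = Counter()
    for record in records:
        source = WORKLOAD_BY_IP.get(record["sourceIPv4Address"], "unknown")
        destination = WORKLOAD_BY_IP.get(record["destinationIPv4Address"], "unknown")
        key = (
            source,
            destination,
            record["protocolIdentifier"],
            record["destinationTransportPort"],
        )
        counts[key] += 1
    return counts


def candidate_rules(counts, min_observations=2):
    """Return repeatedly observed flows between known workloads as rule candidates."""
    return sorted(
        key
        for key, seen in counts.items()
        if seen >= min_observations and "unknown" not in key[:2]
    )
```

The output is only a list of candidates for human review. Flows involving unmapped addresses are deliberately excluded so that they are investigated rather than silently allowed.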

Traditional data center segmentation followed levels of trust among the DMZ, application logic (web), and business logic (database) tiers. This traditional segmentation may not deliver the performance efficiencies or security needed today.

When organizations avoid infrastructure-centric design paradigms, they are more likely to deliver services efficiently in the data center and to become adept at detecting and preventing advanced persistent threats. Advanced security service insertion and service chaining are ways of evolving beyond physically bound protection mechanisms: a specific service (or even multiple third-party protection services, such as virtual IDS/IPS tools) is designed and called upon across VM instances or other virtual services when and where needed. Applying protection laterally across east-west services in this way helps avoid unnecessary hindrance of traffic flow performance in countermeasure design.

Traditional segmentation, which is infrastructure-centric, lacks the granularity to properly address least-privilege insider needs and stealthy outsider threats. It is not nuanced; it relies on zones of defense and assumes that all traffic types and threats will be contained in their appropriate zones. Micro-segmentation pays closer attention to traffic types (east-west, north-south) and creates policies that address specific protect surfaces.
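The difference between zone-level and workload-level policy can be illustrated with a default-deny rule check. This is a minimal sketch, not a representation of any vendor's policy engine; the `Rule` type, the workload names, and the ports are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One explicitly permitted flow between two named workloads."""
    source: str
    destination: str
    protocol: str
    port: int


# Hypothetical allow-list: anything not listed here is denied.
POLICY = frozenset({
    Rule("web", "app", "tcp", 8443),
    Rule("app", "db", "tcp", 5432),
})


def is_allowed(source, destination, protocol, port, policy=POLICY):
    """Default deny: permit a flow only if an exact matching rule exists."""
    return Rule(source, destination, protocol, port) in policy
```

Under this sketch the web workload may reach the application workload but not the database directly, even if all three sit in the same network zone. That is the granularity a zone-based design does not provide.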